Subsequently, if the sub-query executes without any errors or exceptions, we can assume that it is safe. We can then wrap it back into the parent UNLOAD statement, this time replacing the bind parameters with the actual user-supplied values (simply concatenating them), which have now been validated by the SELECT query that was just run.

You can now unload the result of an Amazon Redshift query to your Amazon S3 data lake as Apache Parquet, an efficient open columnar storage format for analytics. The Parquet format is up to 2x faster to unload and consumes up to 6x less storage in Amazon S3 compared to text formats. We will look at some of the ways to import data into a Redshift cluster from an S3 bucket, as well as to export data from Redshift to an S3 bucket.
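As a rough illustration of the export and import directions mentioned above, here is a minimal Python sketch that only builds the SQL text for an UNLOAD to Parquet and a COPY from CSV. The helper names, the `venue` table, the bucket paths, and the IAM role ARN are all hypothetical placeholders; the statements would still have to be sent to the cluster through whatever database driver you use.

```python
def unload_parquet_sql(select_sql, s3_prefix, iam_role):
    """Build an UNLOAD statement that writes a query result to S3 as Parquet."""
    # UNLOAD takes the query as a quoted string, so single quotes inside
    # the query have to be doubled.
    quoted = select_sql.replace("'", "''")
    return (
        f"UNLOAD ('{quoted}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        "FORMAT AS PARQUET"
    )


def copy_csv_sql(table, s3_path, iam_role):
    """Build a COPY statement that loads a CSV file (with a header row) from S3."""
    return (
        f"COPY {table}\n"
        f"FROM '{s3_path}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        "FORMAT AS CSV\n"
        "IGNOREHEADER 1"
    )


# Hypothetical values, for illustration only.
ROLE = "arn:aws:iam::123456789012:role/ExampleRole"
print(unload_parquet_sql(
    "SELECT * FROM venue WHERE venuestate = 'NV'",
    "s3://example-bucket/unload/venue_",
    ROLE,
))
print(copy_csv_sql("venue", "s3://example-bucket/load/venue.csv", ROLE))
```

Both helpers treat the table name, S3 path, and role as trusted configuration; they are not meant to receive user input.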

# Redshift unload to S3 Parquet: how to

This would let us use Redshift's prepared statement support (which does work for SELECT queries) to bind and validate the potentially risky, user-supplied parameters first.

In this article, we are going to learn about Amazon Redshift and how to work with CSV files.

While I was looking for a way around this, a colleague of mine came up with a workaround: instead of binding the parameters into the UNLOAD query itself (which Redshift does not support), we could bind them to the inner sub-query inside the UNLOAD's ( ) first and run that sub-query on its own, perhaps with a LIMIT 1 or a 1=0 condition to limit its running time. The inner query happens to be a SELECT, which is probably the most common kind of sub-query used within UNLOAD statements by most Redshift users, I'd say.

pandas on AWS: easy integration with Athena, Glue, Redshift, Timestream, Neptune, OpenSearch, QuickSight, Chime, CloudWatchLogs, DynamoDB, EMR, SecretManager.

You can use the CREATE EXTERNAL FUNCTION command to create user-defined functions that invoke functions from AWS Lambda.
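The workaround for parameterized UNLOAD statements (first run the inner SELECT with bound parameters and a `1 = 0` condition, then inline the values into the UNLOAD text) could be sketched roughly as below. This is only a sketch under several assumptions: the cursor is a DB-API style object that accepts `%s` placeholders, the helper names are made up for illustration, and the literal quoting is deliberately conservative (it rejects backslashes instead of trying to escape them). It is not an official Redshift or driver API.

```python
def quote_literal(value):
    """Render a Python value as a SQL literal (conservative sketch)."""
    if value is None:
        return "NULL"
    if isinstance(value, bool):
        return "TRUE" if value else "FALSE"
    if isinstance(value, (int, float)):
        return repr(value)
    text = str(value)
    if "\\" in text:
        # Assumption: refuse backslashes rather than guess at escaping rules.
        raise ValueError("backslashes are not supported in this sketch")
    return "'" + text.replace("'", "''") + "'"


def inline_params(select_sql, params):
    """Replace each %s placeholder with the matching quoted literal."""
    parts = select_sql.split("%s")
    if len(parts) - 1 != len(params):
        raise ValueError("placeholder count does not match parameter count")
    out = [parts[0]]
    for value, tail in zip(params, parts[1:]):
        out.append(quote_literal(value))
        out.append(tail)
    return "".join(out)


def build_unload(cursor, select_sql, params, s3_prefix, iam_role):
    """Validate the inner SELECT with bound parameters, then build the UNLOAD."""
    # Step 1: dry run. The driver binds the parameters, and the 1 = 0
    # condition means no rows are returned. An exception here aborts the flow.
    cursor.execute(f"SELECT * FROM ({select_sql}) AS probe WHERE 1 = 0", params)
    # Step 2: inline the values, then double the quotes again because
    # UNLOAD takes the whole query as a quoted string.
    inner = inline_params(select_sql, params).replace("'", "''")
    return (
        f"UNLOAD ('{inner}') TO '{s3_prefix}' "
        f"IAM_ROLE '{iam_role}' FORMAT AS PARQUET"
    )
```

A call might look like `build_unload(cur, "SELECT * FROM venue WHERE venuestate = %s", ["NV"], "s3://example-bucket/out/", role_arn)`, where `cur`, the table, the bucket, and `role_arn` are placeholders; the returned string is then executed as a separate statement. The S3 prefix and role are assumed to come from trusted configuration, not from the user.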

# Redshift unload to S3 Parquet: code

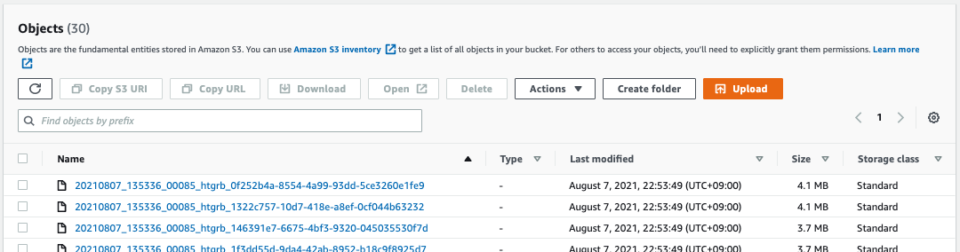

Error message/stack trace:

Any other details that can be helpful: the test code works when all parameters are hardcoded.

You can use the COPY command to load (or import) data into Amazon Redshift and the UNLOAD command to unload (or export) data from Amazon Redshift.

- Method 1: Unload Data from Amazon Redshift to S3 using the UNLOAD command
- Method 2: Unload Data from Amazon Redshift to S3 in Parquet Format
- Limitations of Unloading Data From Amazon Redshift to S3
- Conclusion

In the ETL process, you load raw data into Amazon Redshift.

Thanks for your quick reply, and thanks for re-raising this issue with the Redshift server team.

Expected behaviour: an unload is executed successfully when a parameterized expression is used.
